How we know we can trust the results from Ashes

This is one of the most common questions we get when we demo Ashes to industry or research groups. And rightly so! For anyone to trust the results from Ashes, we need to show that it performs at least as well as other software and matches the physical world as closely as possible.

The problem with software verification and validation is that the moment you finish assessing how well your software performs, your report is already outdated. If you are adding new features or improving existing ones, you need new tests, and a tiny change in one small part of the program can have large unintended consequences in a completely different part. After a few years, the code that was verified in a certification report may not even exist anymore.

How we do verification and validation

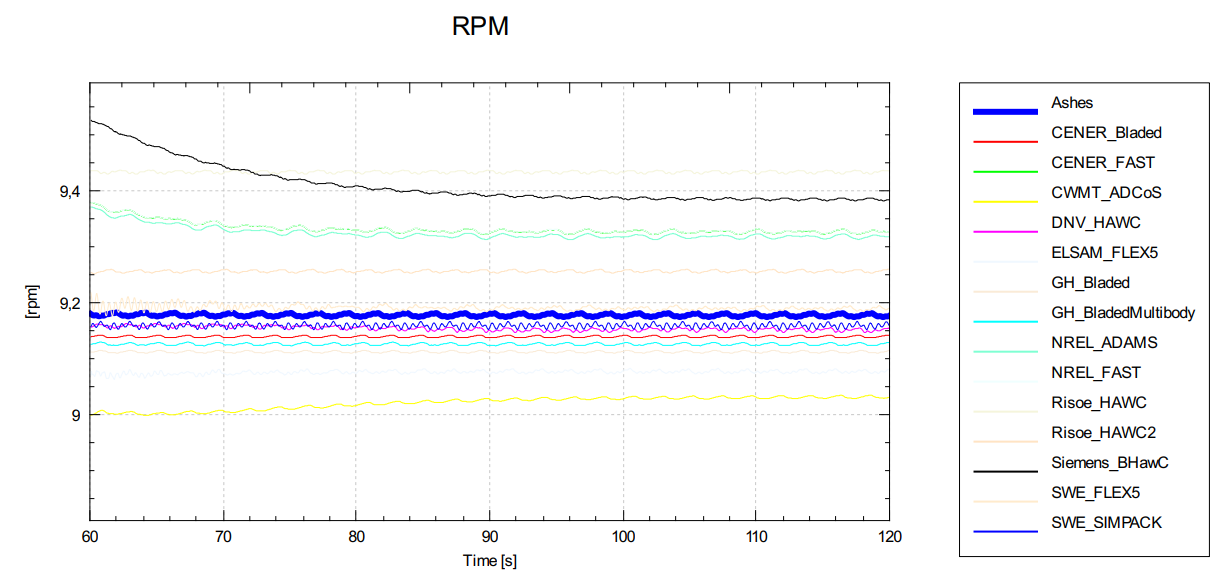

Our solution to this problem is automated, continuous and rigorous testing. Every night we automatically run more than 1,000 simulations and compare the results against several sets of reference data, including:

- analytical solutions

- experimental results

- output from other aeroelastic codes

- previous results from Ashes

If any result deviates from what it should be, we receive an automatic notification identifying which sensor, in which load case and which batch, has failed, and we can trace the code change that introduced the issue.
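To make the idea of a per-sensor check more concrete, here is a minimal Python sketch of a tolerance-based comparison between a simulated output and its reference. It is an illustration only, not how Ashes implements its test framework: the `SensorResult` structure, the `compare` and `failing_sensors` functions, the sample batch, load case and sensor names, and the 0.1% relative tolerance are all hypothetical. Comparisons against experimental data or other codes would likely need looser, case-specific criteria than a sample-by-sample match.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class SensorResult:
    # All field names are hypothetical, chosen for illustration.
    batch: str              # test batch the load case belongs to
    load_case: str          # load case that was simulated
    sensor: str             # output sensor being checked
    values: list[float]     # simulated time series
    reference: list[float]  # reference series (analytical, experimental, other code, previous run)


def compare(result: SensorResult, rel_tol: float = 1e-3, abs_tol: float = 1e-9) -> bool:
    """Return True if every simulated sample matches its reference within tolerance."""
    if len(result.values) != len(result.reference):
        return False
    return all(
        math.isclose(v, r, rel_tol=rel_tol, abs_tol=abs_tol)
        for v, r in zip(result.values, result.reference)
    )


def failing_sensors(results: list[SensorResult], rel_tol: float = 1e-3) -> list[tuple[str, str, str]]:
    """Collect (batch, load case, sensor) for every comparison that fails."""
    return [(r.batch, r.load_case, r.sensor) for r in results if not compare(r, rel_tol)]


# Usage: one sensor matches its reference, the other drifts by 0.5 on the second sample.
results = [
    SensorResult("Batch A", "Case 1", "Tip deflection", [1.0, 2.0], [1.0, 2.0]),
    SensorResult("Batch A", "Case 2", "Root moment", [10.0, 20.5], [10.0, 20.0]),
]
for batch, case, sensor in failing_sensors(results):
    print(f"FAILED: {batch} / {case} / {sensor}")
```

Reporting the batch, load case and sensor together, rather than a single pass/fail flag for the whole run, is what makes it possible to go straight to the failing output and then look for the code change that caused it.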

This not only means that we know, every day, that our results are as accurate as possible; it also saves us a great deal of time, for two reasons:

- It lets us catch differences in results very early, while the code changes we made are still fresh in our minds and easy to revisit.

- It stops us from building around a buggy piece of code; otherwise, by the time the error is finally discovered, there may be much more code to rewrite.

Being transparent is as important as producing accurate results

Perhaps the most important feature of our benchmarking framework is that it is fully published and reproducible. Every time we release a new version of Ashes, we upload the benchmark reports to our website so that anyone can see how that version performs. For every test, we also provide a detailed explanation of what we did, together with the models, files and assumptions used, so that anyone can rerun the simulation and check that the results are correct.

So, “Have you run any benchmarks to validate Ashes?”

Yes, and chances are they are running again as you read this.

To learn more about our benchmarking framework, you can:

- check the reports for the latest Ashes version here.

- watch the video where we explain our benchmarking framework here.

Published 2023-03-29